TechEthos Holds Final Policy Event on Ethics for the Green and Digital Transition

On 14 November, the Horizon 2020-funded TechEthos project held its final policy event in Brussels to discuss the role of ethics in the green and digital transitions for an audience of researchers, policymakers, and the wider community.

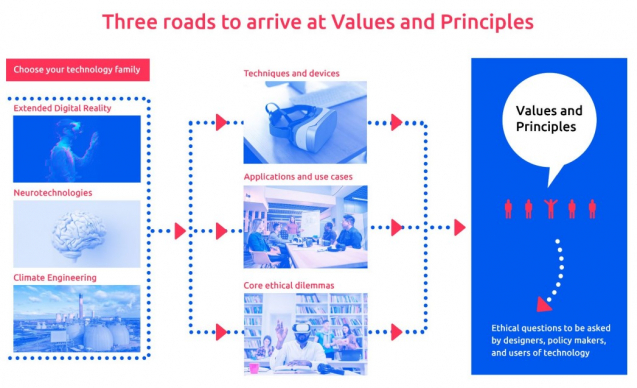

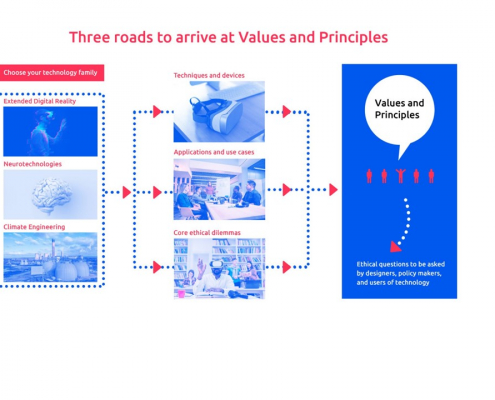

Since 2020, an EU-funded consortium led by the Austrian Institute of Technology (AIT) has been exploring the societal and ethical impacts of new and emerging technologies. The TechEthos project aims to facilitate “ethics by design”, i.e., it advocates bringing ethical and societal values into the design and development of technology from the very beginning of the process.

The policy event, which signals the end of the project in 2023, was hosted by Barbara Thaler, Member of the European Parliament (MEP) and member of the Panel for the Future of Science and Technology (STOA). The event highlighted ongoing ethical debates, as well as current and expected EU policy debates such as the proposed AI Act, the implementation of the Digital Services Act and Digital Markets Act, the European Green Deal, and the European Commission’s proposal for a Carbon Removal Certification Framework.

In his opening statement, Mihalis Kritikos, Policy Analyst at the European Commission’s Directorate-General for Research and Innovation (DG RTD), stressed that ethically designed policies are essential for public acceptance of new technologies, and ultimately for a just digital and green transition.

His remarks were complemented by TechEthos Coordinator, Eva Buchinger, who provided an overview of how TechEthos addresses possible concerns from society related to new and emerging technologies using an approach that combines scanning, analysing and enhancing existing frameworks and policies. The key messages are condensed in a number of TechEthos policy briefs, which are available here.

Ethics for the digital transformation

In the first keynote of the day, Laura Weidinger, Senior Research Scientist at Google DeepMind, discussed different approaches to socio-technical safety evaluation of generative AI systems to explore how we can ensure that models like ChatGPT are safe to release into society. At an early developmental stage, it can be challenging to predict a technology’s capabilities, how it will be used, and its impact on the world.

Most of the current safety evaluations and mechanisms focus on fact-checking the direct capability of language models, i.e., whether the information they generate is accurate. However, the believability of such information, as well as its impact on society, remains largely understudied. Weidinger advocated for an ethics evaluation framework with a clear division of roles and responsibilities, where model-builders carry the main responsibility for capability testing, application developers for studying how the models are used, and third-party stakeholders for looking at systemic impact.

A panel consisting of Laura Weidinger, Alina Kadlubsky (Open AR Cloud Europe), and Ivan Yamshchikov (CAIRO – the Center for Artificial Intelligence and Robotics), introduced by Alexei Grinbaum (CEA – the French Alternative Energies and Atomic Energy Commission & TechEthos partner), delved deeper into the ethical, social, and regulatory challenges of Digital Extended Reality and Natural Language Processing.

The panel reflected on key ethical issues related to emerging technologies, including transparency (should AI-generated content always be marked as such, and is this sufficient for users to process it accordingly?), accountability (how do we balance the responsibilities of users and developers, and can real-world values and regulations be translated into the metaverse?), and nudging/manipulation (what should be permitted, and when does it serve the benefit of society?).

Ethics for the green transition

In the afternoon, Behnam Taebi, Professor of Energy & Climate Ethics at Delft University of Technology, gave a keynote lecture on the governance and ethical challenges of emerging technologies for the green transition. Climate Engineering technologies continue to be controversial, and some researchers have even called for a complete ban on research in this area. This is largely due to substantial possible risks (e.g., ozone depletion, negative impacts on agriculture, and many as yet unknown risks), as well as regulatory complexity due to their international and intergenerational nature.

However, these technologies are increasingly being considered essential to cap global warming at 1.5 degrees, meaning that an ethically informed future governance framework will be needed. Taebi emphasised that these technologies (and their potential and risks) continuously evolve, and so do public perception and moral beliefs. Therefore, a dynamic ethical assessment will be required to make regulatory frameworks fit for the future.

The lecture was followed by a panel discussion with Behnam Taebi, Dušan Chrenek (European Commission, DG Climate Action), and Matthias Honegger (Perspectives Climate Research), introduced by Dominic Lenzi (University of Twente & TechEthos partner), which provided further insights into the ethical, social, and regulatory challenges related to Climate Engineering.

The panel concluded that any technological opportunities that contribute to climate change mitigation should be explored. However, they emphasised that it is important to acknowledge that different technologies (e.g., carbon dioxide removal vs. solar radiation modification) have very different societal implications, hugely diverse risk profiles, and unique intellectual property challenges, and should therefore be subject to tailored ethical analyses and regulatory frameworks.

Highlights and outlook for the ethical governance of emerging technologies

In the final session, Maura Hiney, Chair of the ALLEA Permanent Working Group on Science & Ethics, placed TechEthos’s outcomes in the larger context of the recently revised ALLEA Code of Conduct for Research Integrity, and reiterated the need for suitable research integrity frameworks to guide researchers who work on emerging technologies.

To conclude the event, Eva Buchinger, Laurence Brooks (University of Sheffield), and Renate Klar (EUREC – European Network of Research Ethics Committees) shared their views and insights on the continuation and implementation of the work beyond the lifetime of the TechEthos project.

The report serves to review these legal domains and related obligations at international and EU levels, identifies the potential implications for fundamental rights and principles of democracy and rule of law, and reflects on issues and challenges of existing legal frameworks to address current and future implications of the technologies. The 242-page report covers human rights law, rules on state responsibility, environmental law, climate law, space law, law of the seas, and the law related to artificial intelligence (AI), digital services and data governance, among others as they apply to the three technology families.