“Questions, Not Answers, Are Better Suited to Start a Reflection on Ethical Issues”

Technology has immense power to shape our world in a variety of spheres, from communication to education, work, health, transportation, climate, politics, and security. New and innovative technologies with great potential for wide socio-cultural and economic impact (often referred to as “emerging technologies”) are thus often fraught with ethical questions, which range from concerns about privacy breaches to manipulation, fairness, and the exacerbation of power gaps and exploitation. Because these technologies could affect every aspect of our lives, it is important to acknowledge and address these ethical questions right at the outset, as early as possible in the process of technological design and implementation.

In this relatively nascent field of emerging technologies and ethics, TechEthos (Ethics for Technologies with High Socio-Economic Impact), a Horizon 2020-funded project, published a report on the ethical issues that need to be considered for three technology families: Digital eXtended Reality, including visual eXtended Reality (XR) and Natural Language Processing (NLP) techniques; neurotechnologies; and climate engineering, including Carbon Dioxide Removal (CDR) and Solar Radiation Management (SRM).

Dr Laurynas Adomaitis, Tech Ethicist, CEA

In this Digital Salon interview, we speak with the lead author of the report, Dr Laurynas Adomaitis, Tech Ethics Researcher at Commissariat à l’Énergie Atomique et aux Énergies Alternatives (CEA), about the ethical dilemmas inherent to emerging technologies, how researchers can effectively use the tools in the report, and the role of policymakers and funding organisations in promoting the integration of ethics into every stage of technology research.

Question: Are the core ethical dilemmas in emerging technologies fundamentally similar to ethical considerations inherent to all research? How are they different?

Laurynas Adomaitis: Emerging technologies are often based in research, so there is definitely overlap with the core dilemmas we discuss in research ethics. For example, while looking at climate engineering, we discovered that one point of contention was whether research into Solar Radiation Management (reflecting/refracting solar energy back into space) is ethically justified. One of the arguments against it is that researching such techniques presents the world with a “plan B”, which may distract from climate change mitigation efforts.

We also found a lot of issues with consent in XR (extended reality) and neurotech, which cuts across research ethics. For example, there are ethical concerns with so-called “deadbots” – chatbots constructed based on conversational data from deceased individuals. How is consent possible for an application that did not exist when the person was conscious? Likewise, in neurotech we must be aware of changing people’s mental states. For example, sometimes a treatment is required before consent can be given, but then can it be revoked by the patient? Or, if a BCI (brain-computer interface) changes a person’s mental states, can it also change how they feel about consent?

Q: Which of the three technology families did you find particularly fraught with ethical issues? Why?

LA: The three technology families – XR, neurotech, and climate engineering – are at very different stages of development. Many applications in XR are already in production and available to the public; neurotech is starting in medical tests but is mainly based on future promise; and climate engineering is only beginning to be explored, with huge issues on the horizon.

Each technology family has many issues and at least one beastly challenge to conquer. For climate engineering, it’s irreversibility – can we make irrevocable changes to the planet? For neurotech, it’s autonomy – how can we enhance cognitive abilities while respecting independent and free thinking? For XR, it’s a set of particular issues, like nudging, manipulation, deep fakes, concerns about fairness, and others. I think it’s a wider array of issues for XR because it is already hitting the reality of implementation, where many practical problems arise. There are even skeptical researchers who think that virtual realities should not exist at all because of the moral corruption they may cause, especially with children. This fundamental issue still lingers, spurring the need for empirical studies.

Q: What were some overarching ethical themes common to all three technology families?

LA: There are cross-cutting issues that relate to uncertainty, novelty, power, and justice. But the most important aspect that kept reappearing was the narratives about new technologies that are found in lay reactions to them.

To elucidate this in the report, we used a framework that was developed over 10 years ago in the DEEPEN (Deepening ethical engagement and participation in emerging Nanotechnologies) project. It worked very well in the context of our ethical analysis. Many concerns were along the lines of five tropes of lay reactions to novelty: “Be careful what you wish for”, based on the motifs of exact desire and too big a success; “Messing with Nature”, based on the motifs of irreversibility and power; “Opening Pandora’s box”, based on the motifs of irreversibility and control; “Kept in the dark”, based on the motifs of alienation and powerlessness; and “The rich get richer, the poor get poorer”, based on the motifs of injustice and exploitation. Although these reactions are natural, and sometimes justified, we had to keep asking ourselves whether they are the most pressing ones. It’s still astonishing that the same narratives apply across times and technologies.

Source: TechEthos Report on the Analysis of Ethical Issues

Q: How can the research community best implement the tools/findings in this report?

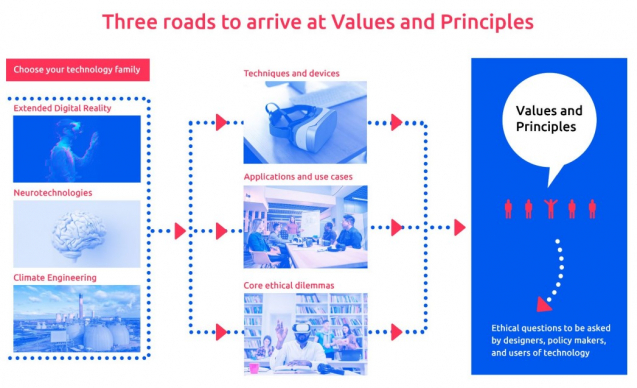

LA: The report is structured in a hierarchical way. It starts with some core dilemmas that are the foundation of reasoning, then moves on to applications and, finally, to values and principles. The value sections are the most important for researchers and practitioners. They cover the key considerations, and each value section ends with a set of questions. We wrote these questions with a researcher in mind. What should one consider when trying to explore, design, and implement the technology? What are the checks and balances with respect to the value in question? We intended these questions to be operationalisable so they offer the best value for implementation.

Q: How can policymakers better support the integration of “ethics by design” in emerging technologies?

LA: Technology research should be in step with ethical research on the technologies. The time difference between the development in tech and ethical or policy research creates a divide, where we have to work retroactively, and it’s very inefficient. Imagine if carbon-intensive technology and industry were developed alongside climate preservation from the very beginning. Of course, there have been philosophers and ethicists, like Hans Jonas, as early as the 1970s calling for ecological activism and responsibility for future generations. But they were mavericks and pioneers, working with passion but without support. We should try to open up these perspectives and take them seriously at the policy level when the technologies are emerging.

Q: What role can funding organisations play in centering ethics in emergent tech?

LA: It’s a difficult question to answer, since it is very uncertain what actually provokes ethical reflection. Ethical reflection is, as we like to call it, opaque. It’s not always transparent when it happens or why. What will actually cause people – researchers and industry alike – to stop and reflect? In our report, we avoided guidelines or directives that would offer “solutions”. Instead, we focused on questions that should be asked. Questions are better suited for starting a reflection on ethical issues. For example, if you’re building a language model, how will it deal with sensitive historical topics? How will it represent ideology? Will it have equal representation for different cultures and languages?

There is no “one way” to address these challenges, but the questions are important and researchers should at least be aware of them. If the standards for dealing with them are not clear yet, I would prefer to see each research project find its own way of tackling them. That will lead to more original approaches and, if a working consensus is found, standardisation. But the central role played by the funding bodies could be to guide researchers towards the relevant questions and start the reflection. We intended our report to provide some instruction on that.

You can read our summary of the TechEthos report by Dr Adomaitis on the analysis of ethical issues in Digital eXtended Reality, neurotechnologies, and climate engineering here, and the full report here.

TechEthos is led by AIT Austrian Institute of Technology and will be carried out by a team of ten scientific institutions and six science engagement organisations from 13 European countries over a three-year period. ALLEA is a partner in the consortium of this project and will contribute to enhancing existing legal and ethical frameworks, ensuring that TechEthos outputs are in line with and may complement future updates to The European Code of Conduct for Research Integrity.